6 Methods To Prioritize Features

There are many ways to figure out what to work on first, second, and last. Over the decades, some people have built frameworks around this problem—here are six of those.

👋 Hey, it’s András here! Every two weeks, I write about product management concepts, hot takes, and frameworks to help you build better products.

Have you ever said “no” to a new request coming from a colleague? Congratulations! That means you have prioritized something over something else.

For digital products, feature requests usually pour in from many internal and external stakeholders: end-users, investors, or internal departments, to name just a few. To keep things focused, you might need a clear method of getting your features in order.

Below you’ll find 6 techniques that approach feature prioritization from different perspectives. Some will give you a score to rank your items, while others will make you realize why one feature may be more important than others. The following list is mainly intended for product people, but regardless of your job title, you might find some of these methods useful for prioritizing work. So let’s dive in!

1. Kano model

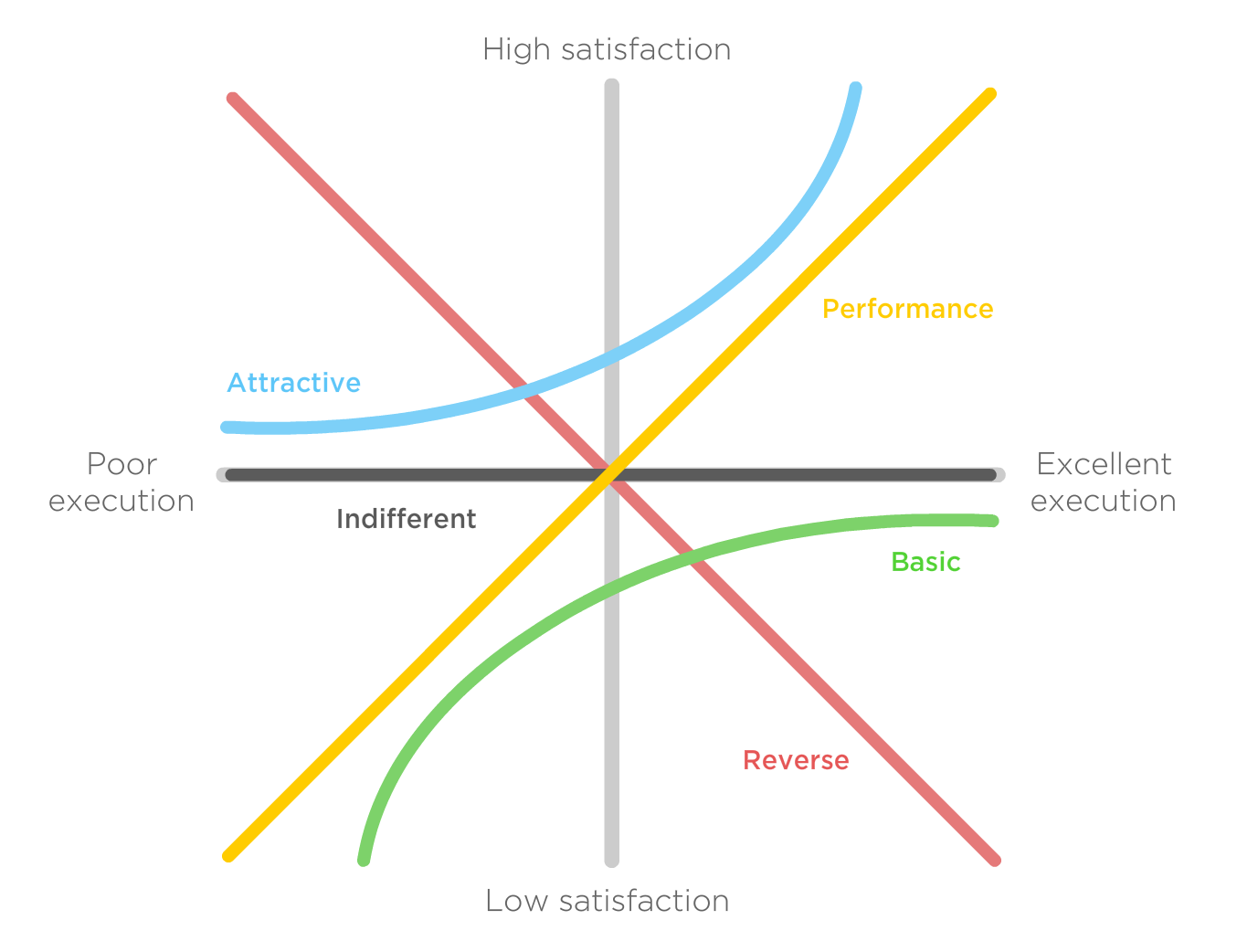

Developed in the 1980s by Professor Noriaki Kano, this method classifies customer preferences into five categories:

Basic: Features that customers expect and take for granted, because every product in the same category has them. When they are done well, customers are neutral, but when they are done poorly, they cause dissatisfaction. Example: voice calling functionality in a smartphone

Performance: These features generate satisfaction when fulfilled and dissatisfaction when they are not. More is generally better and will result in a more valuable product. Example: the range of electric cars

Excitement: Features that are not expected but cause a positive reaction when present. Their absence does not cause dissatisfaction, but their presence can increase overall satisfaction with a product. Example: animated fireworks when you have completed a task

Indifferent: Features that users feel indifferent about. They result in neither satisfaction nor dissatisfaction, and in most cases they are not worth developing further. Example: the thickness of a milk carton

Reverse: The absence of these features can cause satisfaction, while their presence will surely cause dissatisfaction. These are misguided features that frustrate customers, so one should avoid building them. Example: using excessive scripts when communicating with customers in a call center

The five categories can be plotted on a graph where one axis represents the level of satisfaction and the other represents the level of execution.

Kano questionnaire

With the dimensions and feature categories defined, we can turn to our users and ask two questions about the feature we would like to evaluate:

“How do you feel if you have the feature?” (our functional question)

“How do you feel if you don’t have the feature?” (our dysfunctional question)

The questions are not open-ended: each one must be answered with one of a fixed set of options. They are designed not to produce a rating but to capture the user’s expectations. The possible answers are:

I like it

I expect it

I don’t care

I can live with it

I dislike it

Evaluating

Based on the answers to the functional and dysfunctional questions, an evaluation matrix is used to classify each feature: the functional answers run along one axis, the dysfunctional answers along the other, and each combination points to one of the five categories (Basic, Performance, Excitement, Indifferent, Reverse).

Note that two cells of the matrix are marked with a question mark: answering “I like it” to both questions, or “I dislike it” to both. These are questionable answers, since one contradicts the other, so they cannot be graded.
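To make the evaluation step concrete, here is a minimal Python sketch of the standard Kano evaluation table as it is commonly published (the matrix you work from may be laid out differently). The constant and function names are hypothetical, and aggregating respondents by picking the most frequent category is a simple assumption, not the only way to combine answers.

```python
from collections import Counter

# Hypothetical constants for the five answer options, in the order listed above.
LIKE = "I like it"
EXPECT = "I expect it"
NEUTRAL = "I don't care"
LIVE_WITH = "I can live with it"
DISLIKE = "I dislike it"
ANSWERS = [LIKE, EXPECT, NEUTRAL, LIVE_WITH, DISLIKE]

# Standard Kano evaluation table.
# Rows: answer to the functional question; columns: answer to the
# dysfunctional question, both in ANSWERS order.
# E = Excitement, P = Performance, B = Basic, I = Indifferent,
# R = Reverse, Q = Questionable (contradictory answers).
TABLE = [
    "QEEEP",  # functional: I like it
    "RIIIB",  # functional: I expect it
    "RIIIB",  # functional: I don't care
    "RIIIB",  # functional: I can live with it
    "RRRRQ",  # functional: I dislike it
]
CATEGORIES = {
    "E": "Excitement", "P": "Performance", "B": "Basic",
    "I": "Indifferent", "R": "Reverse", "Q": "Questionable",
}


def classify(functional: str, dysfunctional: str) -> str:
    """Map one respondent's pair of answers to a Kano category."""
    code = TABLE[ANSWERS.index(functional)][ANSWERS.index(dysfunctional)]
    return CATEGORIES[code]


def classify_feature(responses: list[tuple[str, str]]) -> str:
    """Assumed aggregation: the most frequent category across all respondents."""
    counts = Counter(classify(f, d) for f, d in responses)
    return counts.most_common(1)[0][0]
```

For instance, `classify(LIKE, DISLIKE)` returns `"Performance"`: the user likes having the feature and dislikes its absence, which is exactly the “more is better” pattern described above.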

While the model won’t produce a precise order for our backlog items, it can help uncover previously unmapped user needs, which makes for more thoughtful feature planning in the future.

Further watching: Building a UX Strategy Using the Kano Model by Jared Spool

2. (R)ICE method

The RICE method consists of four factors: Reach, Impact, Confidence, and Effort. This method is used to rank features and calculate a score from these four factors to help in prioritization. There is an alternative version of this method called ICE, which does not utilize the “Reach” variable.

Reach: The number of users or events affected by the feature within a specific time period, for example customers per quarter or transactions per month.

Impact: How much value a feature can generate. Value is often somewhat subjective, so it’s best to create an internal model for scoring it. Two good ways to do that are:

1.) to align an impact score based on how much a feature supports key company initiatives in a given period (like increasing the number of subscriptions), or

2.) to have predefined values for high (5), medium (3), and low-impact (1) functionalities and agree internally on what those values should cover.

Confidence: Helps you factor in how much an idea is supported by data versus how much it comes from your “gut feeling”. If you have a great idea for a big feature but it’s not supported by any research, a lower confidence value balances out your enthusiasm in the equation.

Effort: How much time delivering the feature will require from all members of your team (not just development, but also research and product design). As with Impact, it’s best to define a specific internal standard, whether you count this in hours, days, or months.

How is the score calculated?

The score is calculated as (Reach × Impact × Confidence) / Effort. RICE is used to compare features and projects within a single organization or department, not between companies, so it’s important to keep the scoring consistent over time. If you used 1, 3, and 5 for the Impact field half a year ago, it doesn’t make sense to use 20 or 50 today.

Sean McBride of Intercom gives a good example in his article on how his organization uses the RICE method, with fixed choices for Impact and Confidence:

Reach: how many people will this impact? (Estimate within a defined time period.)

Impact: how much will this impact each person? (Massive = 3x, High = 2x, Medium = 1x, Low = 0.5x, Minimal = 0.25x.)

Confidence: how confident are you in your estimates? (High = 100%, Medium = 80%, Low = 50%.)

Effort: how many “person-months” will this take? (Use whole numbers and minimum of half a month — don’t get into the weeds of estimation.)
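Putting Intercom’s fixed scales into code shows how a RICE score comes together. This is a minimal sketch: the `Feature` class and the example features are hypothetical, and the score uses the (Reach × Impact × Confidence) / Effort formula from Intercom’s RICE article. The `ice` method simply drops Reach, following the description of ICE at the start of this section; other ICE variants multiply by an Ease score instead of dividing by Effort.

```python
from dataclasses import dataclass

# Fixed scales as listed in Sean McBride's Intercom article.
IMPACT = {"massive": 3, "high": 2, "medium": 1, "low": 0.5, "minimal": 0.25}
CONFIDENCE = {"high": 1.0, "medium": 0.8, "low": 0.5}


@dataclass
class Feature:
    name: str
    reach: float      # people or events per period, e.g. customers per quarter
    impact: str       # key into IMPACT
    confidence: str   # key into CONFIDENCE
    effort: float     # person-months

    def __post_init__(self) -> None:
        # McBride suggests a minimum of half a person-month.
        if self.effort < 0.5:
            raise ValueError("effort must be at least 0.5 person-months")

    def rice(self) -> float:
        return self.reach * IMPACT[self.impact] * CONFIDENCE[self.confidence] / self.effort

    def ice(self) -> float:
        # Same calculation without Reach (one of several ICE variants).
        return IMPACT[self.impact] * CONFIDENCE[self.confidence] / self.effort


def rank(features: list[Feature]) -> list[Feature]:
    """Highest RICE score first."""
    return sorted(features, key=lambda f: f.rice(), reverse=True)
```

A hypothetical “Onboarding checklist” reaching 500 customers per quarter, with high impact, medium confidence, and 2 person-months of effort, scores 500 × 2 × 0.8 / 2 = 400.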

There are many ways to balance the score, so find something that works for you.

3. Effort vs. Impact matrix (or Value vs. Complexity)

A rather simple prioritization method is the “effort vs. impact” matrix, alternatively known as “value vs. complexity”. This method has only two variables, and features are plotted along these two axes.

Impact: The impact of new functionality. This variable is subjective, so the best approach is to reach an internal consensus on how to score it. One way to do this is to use integers from a fixed range, say from 1 to 25, and draw the line between low and high at 12.5. Since 12.5 is not an integer, no feature can land exactly on the line and get stuck between two categories.

Effort: The effort required to deliver the new functionality. This sums up the effort of the development team, UX design, and research. You can either estimate it as a time frame (hours, days, or months, depending on the scale of features your team is developing), or standardize it the same way as Impact, using a range of integers such as 1 to 25.

If you’re using relative estimation on either axis, it’s worth picking some examples from the past that represent the smallest and the largest amounts of work. For example, a small copy change on a button could be a 1, while the biggest redesign or refactor project you remember would be a 25.

After coming up with the Effort and Impact score, you can divide your matrix into four areas, falling into the following categories:

Thankless Tasks: These tasks would result in low impact but require a high degree of effort from your team. Some articles describe these as “Not worth it” or “Money pit”. While I agree that these are the things that you should spend the least amount of time on, there might be technical enablers hiding in them that can be useful.

Major Projects: Tasks that have a high impact but also require high levels of effort. Usability redesigns and new major functionalities often fall into this bucket. These are worth doing if you have the time and resources, and you’re certain that such a feature will improve some metrics.

Fill Ins: Low impact and low effort. These are the tasks you can attack when your team is idle, as they are usually quick fixes in your application.

Quick Wins: The best of the four categories: items that require only a low degree of effort but can have a high impact on your product. This is the category you should focus on first, the “low-hanging fruit”. Depending on your product, you might not find many features here, but the ones that land here are usually worth doing.
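The four quadrants can be sketched as a small classification function. This assumes integer scores from 1 to 25 on both axes with the dividing line at 12.5; the function names and the order in which quadrants are tackled (Quick Wins first, Thankless Tasks last) are illustrative assumptions based on the descriptions above.

```python
THRESHOLD = 12.5  # assumed dividing line on a 1-to-25 integer scale

# Assumed order of attack, from "focus on first" to "spend the least time on".
QUADRANT_ORDER = ["Quick Wins", "Major Projects", "Fill Ins", "Thankless Tasks"]


def matrix_quadrant(impact: int, effort: int) -> str:
    """Place a feature in one quadrant of the Effort vs. Impact matrix."""
    for score in (impact, effort):
        if not isinstance(score, int) or not 1 <= score <= 25:
            raise ValueError("scores must be integers from 1 to 25")
    high_impact = impact > THRESHOLD
    high_effort = effort > THRESHOLD
    if high_impact:
        return "Major Projects" if high_effort else "Quick Wins"
    return "Thankless Tasks" if high_effort else "Fill Ins"


def order_backlog(features: dict[str, tuple[int, int]]) -> list[str]:
    """Sort feature names by quadrant, given {name: (impact, effort)}."""
    return sorted(
        features,
        key=lambda name: QUADRANT_ORDER.index(matrix_quadrant(*features[name])),
    )
```

Because every score is an integer and the threshold is 12.5, each feature falls cleanly into exactly one quadrant.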

While the Effort vs. Impact matrix is an easy concept to use, it requires discipline to keep it consistent and a common understanding among various stakeholders.

4. MoSCoW method

This prioritization technique was developed by Dai Clegg and is mostly used in software development. The method puts needs into four buckets based on their order of importance — which make up the acronym for its name:

Must have: Functionalities that must be delivered within a time frame to declare success. If even one of the requirements from this category is not delivered, the delivery should be considered a failure.

Should have: Needs in this bucket are important but not necessary for success. Items here eventually have to be delivered, but they’re not as time-sensitive as the Must-haves above.

Could have: Functionalities in this bucket are usually small enhancements that could improve a product but are not essential. They’re less important than the previous two categories but can be implemented if time permits.

Won’t have: Items here have the lowest importance. They either don’t match the current challenges of product users or are deemed to be the least critical.

The method is best used to prioritize time-sensitive requirements within a fixed timeframe, making sure the most important items get delivered first. However, the most common critique of the technique is that it doesn’t distinguish between items within the same category.
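A minimal sketch of how MoSCoW buckets could drive a fixed timebox, assuming each requirement carries an effort estimate in days (a hypothetical field; MoSCoW itself doesn’t prescribe one). Must-haves are scheduled first, and if one doesn’t fit, the timebox is flagged as a failure, mirroring the Must-have definition above. The greedy fill for Should- and Could-haves is also an assumption.

```python
from dataclasses import dataclass

# Buckets in order of importance, following the MoSCoW acronym.
BUCKET_ORDER = {"Must": 0, "Should": 1, "Could": 2, "Won't": 3}


@dataclass
class Requirement:
    name: str
    bucket: str    # "Must", "Should", "Could" or "Won't"
    estimate: int  # hypothetical effort estimate, in days


def plan_timebox(requirements: list[Requirement], capacity: int) -> list[str]:
    """Fill a fixed timebox bucket by bucket and return the planned names."""
    # sorted() is stable, so items in the same bucket keep their input order:
    # MoSCoW alone says nothing about which of them should go first.
    ordered = sorted(requirements, key=lambda r: BUCKET_ORDER[r.bucket])
    planned, used = [], 0
    for req in ordered:
        if req.bucket == "Won't":
            continue  # explicitly out of scope for this timebox
        if used + req.estimate <= capacity:
            planned.append(req.name)
            used += req.estimate
        elif req.bucket == "Must":
            # A Must-have that cannot be delivered means the timebox fails.
            raise ValueError(f"Must-have {req.name!r} does not fit in the timebox")
    return planned
```

The stable sort makes the critique visible: two Should-haves come out in whatever order they went in, because the buckets carry no ranking of their own.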

Further reading: The power of the MoSCoW method by René Morency

5. WSJF prioritization

Weighted Shortest Job First (WSJF) is a prioritization method by Don Reinertsen which is often connected to the Scaled Agile Framework. It’s technically an equation with four components for ranking features and epics.

The first component group is the Cost of Delay, which comes from:

User-Business Value: Relative value to the customer or to the business itself. This is subjective and can be connected to revenue, impact or other metrics. It works best if we have an internal consensus on how to use it, like defining a specific range (from 1 to 10) or parts of the Fibonacci sequence. You should also make sure the values given are comparable, and stakeholders are not just choosing the highest available number.

Time Criticality: Indicates how time-sensitive a certain feature is. Is there a specific external deadline? Is it a legal requirement? Are competitors also considering building this feature?

Risk Reduction and/or Opportunity Enablement: Does the feature mitigate a risk (like hardware failure), or enable a new opportunity (entry into an additional market)?

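All these components together add up to the Cost of Delay:

Cost of Delay = User-Business Value + Time Criticality + Risk Reduction and/or Opportunity Enablement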

When choosing the available range for these components, it’s important to keep their scales roughly balanced. If user-business value ranged from 1 to 100 while the other two components only ranged from 1 to 5, business value would dominate the equation.

The denominator of the formula is the Job Duration, or Job Size: an estimate of how much effort the development will take. If your team is new to size estimation, I recommend using T-shirt sizing or the bucket system. If you already know how long it takes to mow a 50 m² lawn, you probably have an idea of how much effort is needed to cut 150 m².

Once you have both main components, you can calculate the Weighted Shortest Job First (WSJF) score:

WSJF = Cost of Delay / Job Duration

As the name implies, it prioritizes quick wins over big projects: the higher the score a feature has, the higher it should sit on your backlog, at least in theory.
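The calculation fits in a few lines: Cost of Delay is the sum of the three components, and WSJF divides it by Job Size. In this sketch the three Cost of Delay components share a 1 to 10 scale and Job Size uses relative points; both scales, the `Job` class, and the job names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    business_value: int    # User-Business Value, assumed 1..10
    time_criticality: int  # assumed 1..10
    risk_opportunity: int  # Risk Reduction and/or Opportunity Enablement, assumed 1..10
    job_size: int          # relative size, e.g. Fibonacci points

    def __post_init__(self) -> None:
        if self.job_size <= 0:
            raise ValueError("job_size must be positive")

    @property
    def cost_of_delay(self) -> int:
        # The three components simply add up.
        return self.business_value + self.time_criticality + self.risk_opportunity

    @property
    def wsjf(self) -> float:
        return self.cost_of_delay / self.job_size


def rank_by_wsjf(jobs: list[Job]) -> list[Job]:
    """Highest WSJF score first, i.e. top of the backlog."""
    return sorted(jobs, key=lambda j: j.wsjf, reverse=True)
```

With hypothetical jobs “SSO” (8, 5, 3, size 8) and “Audit log” (3, 8, 5, size 2), both have a Cost of Delay of 16, but the smaller “Audit log” scores 8.0 against 2.0 and goes first: the “shortest job first” behavior the name refers to.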

Having this score calculated should greatly help you prioritize your backlog, but it’s not one of the simplest methods. It requires talking to your stakeholders and development team, and coordinating the effort to collect the inputs for the calculation.

Further reading: WSJF on scaledagileframework.com

6. Eisenhower matrix

“Who can define for us with accuracy the difference between the long and short term! Especially whenever our affairs seem to be in crisis, we are almost compelled to give our first attention to the urgent present rather than to the important future.” — Dwight D. Eisenhower

Dwight D. Eisenhower achieved a lot during his two terms as the 34th US president. While the Eisenhower matrix was originally designed for task prioritization, it can also serve as a simple method for prioritizing features.

The matrix consists of four quadrants based on importance and urgency:

Do: Urgent and important things. Items that cannot wait, potentially having clear deadlines and consequences for not taking immediate action.

Schedule: Important but not urgent items. Features that bring your product closer to its goals but are not mandatory and can be deferred until later.

Delegate: Urgent but not important items. Requirements that need to be addressed in a short time but are still not important enough to go into the “Do” bucket. You can ask yourself here: is there another product area that should own this?

Eliminate: Neither urgent nor important features. These are the things you should eliminate, at least from your short-term planning.

Urgent items are those that require your short-term attention: visible challenges that pop up on a daily or weekly basis. Urgent tasks are unavoidable, so they usually have to be dealt with first.

Important items contribute to your long-term success. According to the Pareto principle, roughly 80% of outcomes come from 20% of efforts. If you focus on a few key items, those may bring most of the results.

The Eisenhower matrix is somewhat similar to the MoSCoW method outlined above. In both techniques, you’re putting items into four buckets that you rank by priority. One benefit here, however, is that you can differentiate among items within the same category: by defining the exact levels of urgency and importance, you’ll have a clearer picture of where to start.
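Scoring urgency and importance on a numeric scale is what makes that within-quadrant ordering possible. A minimal sketch, assuming both are scored from 1 to 10 and anything above 5 counts as urgent or important; the scale, the threshold, the tie-breaking order (importance before urgency), and the item names are all illustrative assumptions.

```python
from dataclasses import dataclass

THRESHOLD = 5  # assumed: scores above this count as urgent / important
QUADRANT_ORDER = ["Do", "Schedule", "Delegate", "Eliminate"]


@dataclass
class Item:
    name: str
    urgency: int     # assumed scale 1..10
    importance: int  # assumed scale 1..10


def eisenhower_quadrant(item: Item) -> str:
    urgent = item.urgency > THRESHOLD
    important = item.importance > THRESHOLD
    if urgent and important:
        return "Do"
    if important:
        return "Schedule"
    if urgent:
        return "Delegate"
    return "Eliminate"


def rank_items(items: list[Item]) -> list[Item]:
    """Order by quadrant, then by the exact scores within each quadrant."""
    return sorted(
        items,
        key=lambda i: (
            QUADRANT_ORDER.index(eisenhower_quadrant(i)),
            -i.importance,  # more important first within a quadrant
            -i.urgency,     # then more urgent first
        ),
    )
```

Two items that both land in “Do” are no longer tied: the one with the higher importance score comes first, which is exactly the distinction the MoSCoW buckets cannot make on their own.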

Thanks for tagging along until the end!

While all organizations have different needs, hopefully, you’ve learned something useful about the six prioritization methods above.

There are plenty more techniques out there, so if you didn’t quite find what you were looking for, keep exploring!